Parent-child pipelines (FREE)

Introduced in GitLab 12.7.

As pipelines grow more complex, a few related problems start to emerge:

- The staged structure, where all jobs in a stage must complete before the first job in the next stage begins, causes arbitrary waits that slow things down.

- Configuration for the single global pipeline becomes very long and complicated, making it hard to manage.

- Imports with `include` increase the complexity of the configuration, and create the potential for namespace collisions where jobs are unintentionally duplicated.

- Pipeline UX can become unwieldy with so many jobs and stages to work with.

Additionally, sometimes the behavior of a pipeline needs to be more dynamic. Being able to choose whether to start sub-pipelines is powerful, especially if the YAML is dynamically generated.

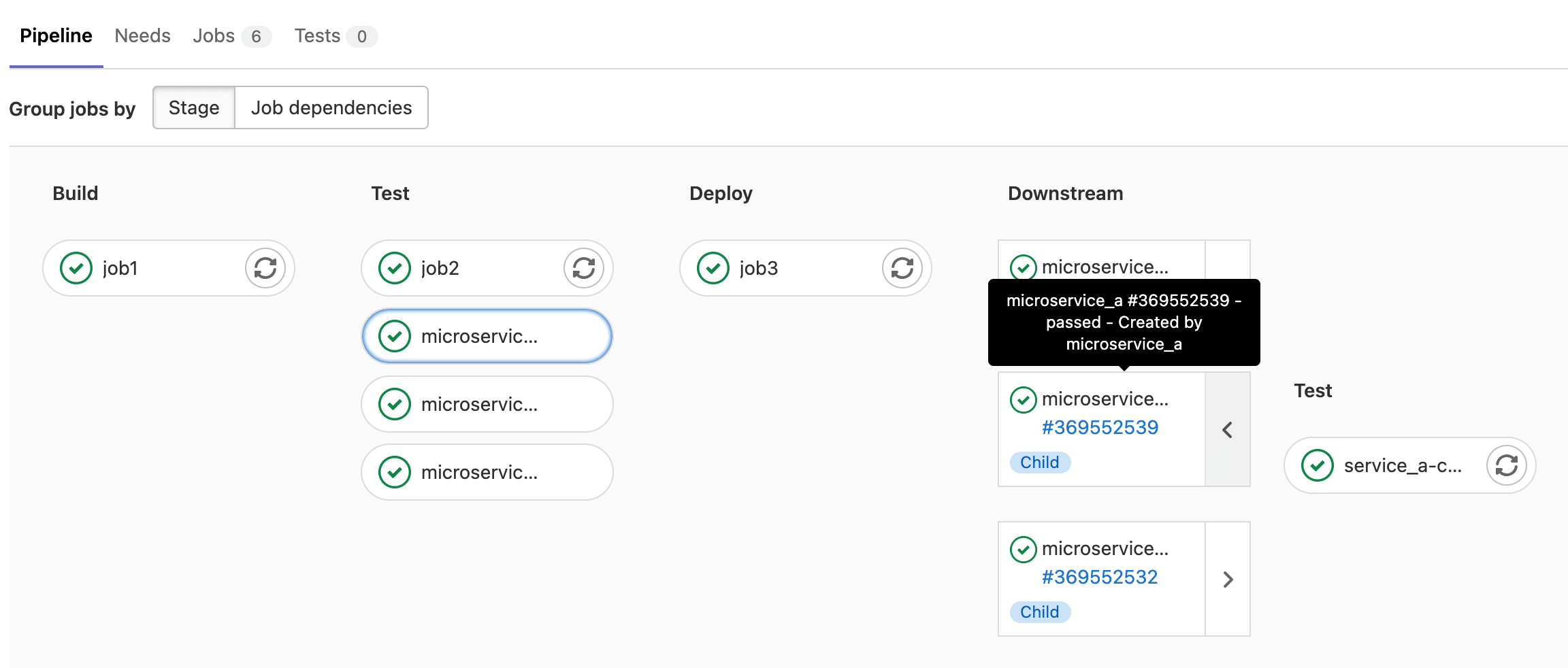

Similarly to multi-project pipelines, a pipeline can trigger a set of concurrently running child pipelines, but within the same project:

- Child pipelines still execute each of their jobs according to a stage sequence, but are free to continue forward through their stages without waiting for unrelated jobs in the parent pipeline to finish.

- The configuration is split up into smaller child pipeline configurations. Each child pipeline contains only relevant steps which are easier to understand. This reduces the cognitive load to understand the overall configuration.

- Imports are done at the child pipeline level, reducing the likelihood of collisions.

Child pipelines work well with other GitLab CI/CD features:

- Use `rules: changes` to trigger pipelines only when certain files change. This is useful for monorepos, for example.

- Since the parent pipeline in `.gitlab-ci.yml` and the child pipeline run as normal pipelines, they can have their own behaviors and sequencing in relation to triggers.
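For example, a monorepo could trigger a service's child pipeline only when files belonging to that service change. This is a minimal sketch. It assumes the service's code lives in a hypothetical `microservice_a/` directory:

```yaml
# parent .gitlab-ci.yml
microservice_a:
  trigger:
    include: path/to/microservice_a.yml
  rules:
    # Add the trigger job (and so create the child pipeline) only when
    # files under the hypothetical microservice_a/ directory change.
    - changes:
        - microservice_a/**/*
```

If no rule matches, the trigger job is not added to the pipeline, so no child pipeline is created.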

See the `trigger` keyword documentation for full details on how to include the child pipeline configuration.

For an overview, see Parent-Child Pipelines feature demo.

Examples

The simplest case is triggering a child pipeline using a local YAML file to define the pipeline configuration. In this case, the parent pipeline triggers the child pipeline, and continues without waiting:

```yaml
microservice_a:
  trigger:
    include: path/to/microservice_a.yml
```

You can include multiple files when defining a child pipeline. The child pipeline's configuration is composed of all configuration files merged together:

```yaml
microservice_a:
  trigger:
    include:
      - local: path/to/microservice_a.yml
      - template: Security/SAST.gitlab-ci.yml
```

In GitLab 13.5 and later, you can use `include:file` to trigger child pipelines with a configuration file in a different project:

```yaml
microservice_a:
  trigger:
    include:
      - project: 'my-group/my-pipeline-library'
        ref: 'main'
        file: '/path/to/child-pipeline.yml'
```

The maximum number of entries that are accepted for `trigger:include` is three.

Similar to multi-project pipelines, we can set the parent pipeline to depend on the status of the child pipeline upon completion:

```yaml
microservice_a:
  trigger:
    include:
      - local: path/to/microservice_a.yml
      - template: Security/SAST.gitlab-ci.yml
    strategy: depend
```

Merge request child pipelines

To trigger a child pipeline as a merge request pipeline, we need to:

- Set the trigger job to run on merge requests:

  ```yaml
  # parent .gitlab-ci.yml
  microservice_a:
    trigger:
      include: path/to/microservice_a.yml
    rules:
      - if: $CI_MERGE_REQUEST_ID
  ```

- Configure the child pipeline by either:

  - Setting all jobs in the child pipeline to evaluate in the context of a merge request:

    ```yaml
    # child path/to/microservice_a.yml
    workflow:
      rules:
        - if: $CI_MERGE_REQUEST_ID

    job1:
      script: ...

    job2:
      script: ...
    ```

  - Alternatively, setting the rule per job. For example, to create only `job1` in the context of merge request pipelines:

    ```yaml
    # child path/to/microservice_a.yml
    job1:
      script: ...
      rules:
        - if: $CI_MERGE_REQUEST_ID

    job2:
      script: ...
    ```

Dynamic child pipelines

Introduced in GitLab 12.9.

Instead of running a child pipeline from a static YAML file, you can define a job that runs your own script to generate a YAML file, which is then used to trigger a child pipeline.

This technique is useful for generating pipelines that target only the content that changed, or for building a matrix of targets and architectures.

For an overview, see Create child pipelines using dynamically generated configurations.

We also have an example project using Dynamic Child Pipelines with Jsonnet, which shows how to use a data templating language to generate your `.gitlab-ci.yml` at runtime.

You could use a similar process for other templating languages like Dhall or ytt.

The artifact path is parsed by GitLab, not the runner, so the path must match the syntax for the OS running GitLab. If GitLab is running on Linux but using a Windows runner for testing, the path separator for the trigger job would be `/`. Other CI/CD configuration for jobs, like scripts, that use the Windows runner would use `\`.
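As an illustration, assume GitLab runs on Linux and a hypothetical `generate-config` job runs on a Windows runner and saves the child configuration as an artifact. The trigger job still uses `/` in its `artifact` path. The `generated/child-pipeline.yml` path is also hypothetical:

```yaml
child-pipeline:
  stage: test
  trigger:
    include:
      # GitLab (assumed here to run on Linux) parses this path,
      # so it uses "/" even though generate-config ran on Windows.
      - artifact: generated/child-pipeline.yml
        job: generate-config
```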

In GitLab 12.9, the child pipeline could fail to be created in certain cases, causing the parent pipeline to fail. This is resolved in GitLab 12.10.

Dynamic child pipeline example

Introduced in GitLab 12.9.

You can trigger a child pipeline from a dynamically generated configuration file:

```yaml
generate-config:
  stage: build
  script: generate-ci-config > generated-config.yml
  artifacts:
    paths:
      - generated-config.yml

child-pipeline:
  stage: test
  trigger:
    include:
      - artifact: generated-config.yml
        job: generate-config
```

The `generated-config.yml` file is extracted from the artifacts and used as the configuration for triggering the child pipeline.
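In this example, `generate-ci-config` stands for whatever script produces the configuration. The job below is a minimal sketch that replaces it with an inline script. It assumes the runner uses a POSIX shell, and the two target names are hypothetical. The script writes one build job per target, which is a simple form of the matrix use case described earlier. The `child-pipeline` job is unchanged:

```yaml
generate-config:
  stage: build
  script:
    # Append one job definition per (hypothetical) target to
    # generated-config.yml. The heredoc body becomes the child config.
    - |
      for target in linux-amd64 linux-arm64; do
        cat >> generated-config.yml <<EOF
      build-$target:
        script: echo "Building for $target"
      EOF
      done
  artifacts:
    paths:
      - generated-config.yml
```

The shell expands `$target` when the job runs, so the resulting child pipeline contains the jobs `build-linux-amd64` and `build-linux-arm64`. Both run in the default `test` stage.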

Nested child pipelines

- Introduced in GitLab 13.4.

- Feature flag removed in GitLab 13.5.

Parent and child pipelines were introduced with a maximum depth of one level of child pipelines, which was later increased to two. A parent pipeline can trigger many child pipelines, and these child pipelines can trigger their own child pipelines. It's not possible to trigger another level of child pipelines.
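For illustration, the three configuration files below show the maximum nesting: the parent pipeline triggers a child, which triggers a grandchild. All file paths and job names are hypothetical, and the files are shown in one block only for brevity:

```yaml
# parent .gitlab-ci.yml
trigger-child:
  trigger:
    include: path/to/child.yml

# path/to/child.yml (first level of child pipelines)
trigger-grandchild:
  trigger:
    include: path/to/grandchild.yml

# path/to/grandchild.yml (second and deepest level):
# its jobs run normally, but it cannot trigger another level of child pipelines.
grandchild-job:
  script: echo "Running in the grandchild pipeline"
```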

For an overview, see Nested Dynamic Pipelines.

Pass CI/CD variables to a child pipeline

You can pass CI/CD variables to a downstream pipeline using the same methods as multi-project pipelines: